Rebrand, multimodal UI support, and more!

Published 2025-06-07

We're renaming ourselves from glhf.chat to Synthetic! It's been a good run with the old glhf.chat domain for nearly a year, but now that we're pretty stable, we think it's time to retire our "good luck" branding. You don't need good luck to run LLMs on our infra: it should just work! We've also launched a Discord server, snuck in support for image input in the chat UI, and more.

- Rebrand to Synthetic

- Discord server

- Multimodal image input in the UI

- Bugfixes and reliability improvements

- Future plans

Rebrand to Synthetic

Yup, we're rebranding: instead of glhf.chat (what a mouthful!), we're now Synthetic. Our new website is synthetic.new; the API base URL (for those of you who use it) is api.synthetic.new, although our old API base URLs should also continue to work without interruption.

The rebrand might not come as a huge shock for those of you who have emailed us over the last year (thank you!) and noticed that our personal emails were coming from a mysterious "Synthetic Lab" domain. We've always intended for the product to at some point be called Synthetic; early on, when we'd just launched and GPU reservations were a bit... sketchy, we decided to call the site "glhf" — for "good luck have fun" — and originally didn't even charge money for GPU time or tokens. As we stabilized and improved performance, we eventually launched billing early this year. And we think it's time to finally retire the old branding. We've been launching a lot of the redesigned colors and UI elements over the last couple months, and now we're switching the name, logo, and URL.

Many thanks to our design advisor (who's also Matt's sister) Noa, who helped us redesign the UI and designed our new logo:

The center of the spirograph flower is also a gear, which we think is quite a cute callout to everyone who's trained models, tested them, or generally built cool stuff on top of the open-source LLMs we run. And we hid a neat Easter egg that shows up when you hover over it in the sidebar...

Discord server

We've gotten quite a few emails about starting a Discord server, so... we have one now! We actually secretly made it a few months ago and were waiting for the rebrand to be ready before launching it. Check it out here.

Multimodal image input in the UI

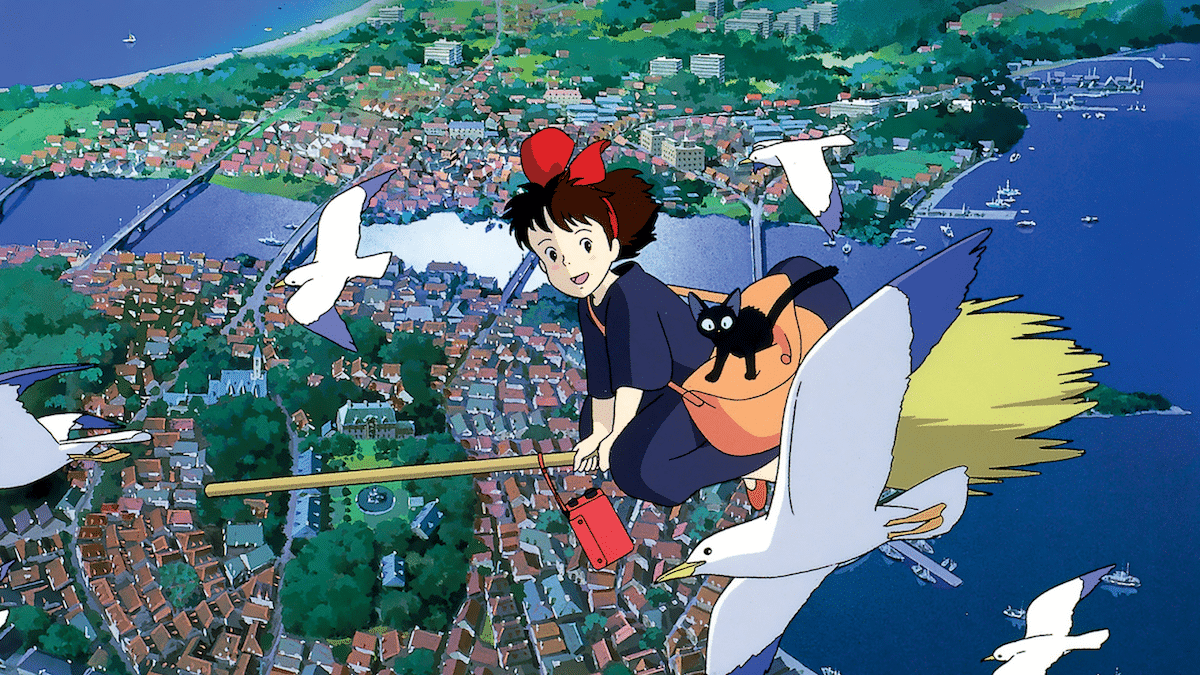

After launching image input in the API last month, we've now launched it in the UI as well. For LLMs trained on image input, like Meta's Llama 4 series, you can now upload images and ask questions about them! For example, asking Llama 4 Scout to describe this image:

Returns:

Try it out on Llama 4 Scout on Synthetic or other models that support vision.
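
If you'd rather send images through the API than the UI, here's a rough sketch of what building such a request might look like in Python. Big caveat: this post doesn't document the request schema, so the sketch assumes an OpenAI-style chat completions payload, with the image passed as a base64 data URL. The helper names (`image_to_data_url`, `build_vision_request`) and the model identifier are hypothetical placeholders, and the code only builds the payload; it doesn't send anything.

```python
import base64
import json


def image_to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_request(model: str, question: str, image_bytes: bytes,
                         mime_type: str = "image/png") -> dict:
    """Build a chat payload with one text part and one image part.

    Assumption: the request shape follows the OpenAI chat completions
    format. The actual schema is not described in this post.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": image_to_data_url(image_bytes, mime_type)
                        },
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for the contents of a real image file.
    payload = build_vision_request(
        model="your-vision-model-id",  # hypothetical model identifier
        question="Describe this image.",
        image_bytes=b"not a real image",
    )
    print(json.dumps(payload, indent=2))
```

In practice you'd read the bytes from an actual image file, swap in the identifier of a vision-capable model, and send the payload with whatever HTTP client you already use.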

Bugfixes and reliability improvements

You've probably noticed the site becoming smoother and easier on the eyes over the last month. That's no accident, and we'll keep working on it! We also launched support for the latest update to DeepSeek's reasoning LLM: DeepSeek-R1-0528.

Future plans

If you made it this far: thanks for reading! We're hard at work on more improvements, including but not limited to:

- Shareable chat threads! Sometimes you just want to shoot a chat thread you've been having with a model over to a friend or coworker, and we want to support this easily (opt-in of course, for privacy). It's just not the same to copy/paste the entire thread and try to send it to someone: it's much easier to just send them a link.

- More always-on model support, and support for more base models for always-on LoRAs!

- Dark mode! We've had a lot of requests for this one and it's on our radar. Our designer is working hard at improving the UI, and one of the items on her agenda is a good set of colors for dark mode.

- Better model search and discovery! It's not easy to figure out which Hugging Face models we support or discover new models to try. Most users might not even know we support thousands of models. We're working on making that clearer and easier.

- More backend and UI improvements. Maybe not the most exciting line item, but we're always bugfixing.

If you have any thoughts or feedback, please continue to reach out at [email protected]. We appreciate all the emails we've gotten so far!

— Matt & Billy